The Problem with Serialization

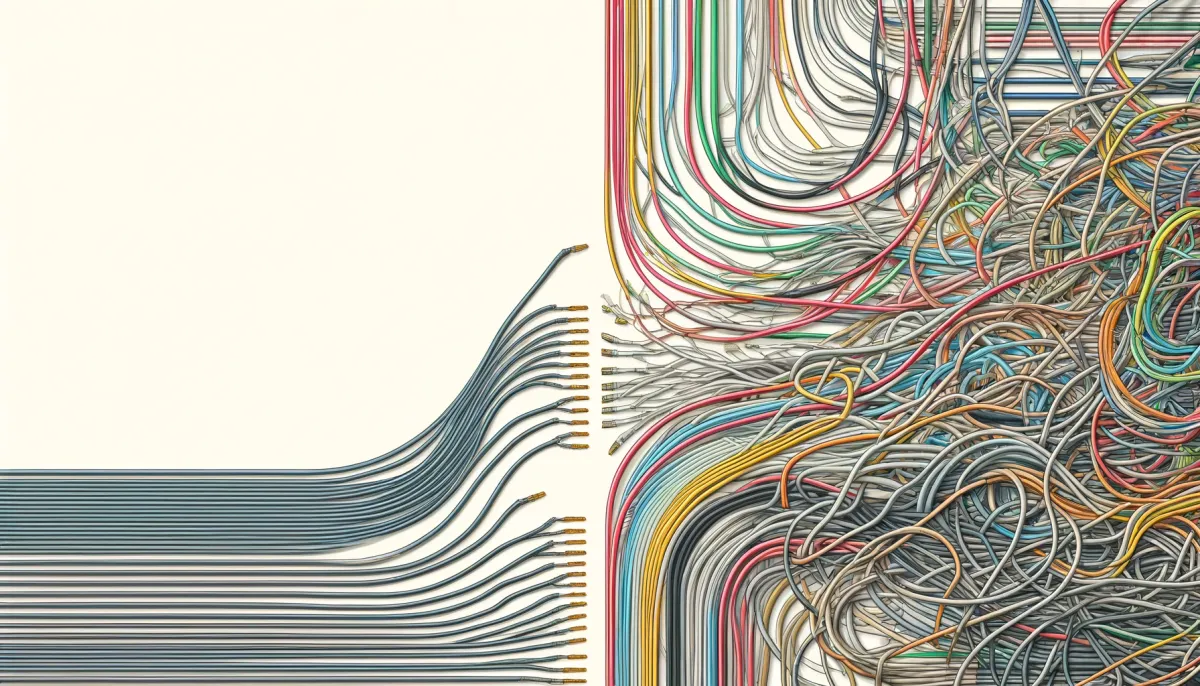

In my last post, I talked about effect sequencers, which wrapped up the discussion of my syntax for universal computation. Now I want to turn to another important part of programming: building data structures. It may seem a roundabout way to get there, but the first thing I want to tackle regarding data structures is the difficulty posed by serialization and deserialization (which I will mostly just call serialization). In particular, communication between different programming languages using serialized data structures is full of complications.

In developing the Ultraviolet Virtual Machine, one nice thing to have would be a way to remotely control VMs over a network connection, for tasks such as loading and debugging programs. Embedded implementations usually communicate over a serial connection, although more and more devices can be programmed over the Internet using TCP sockets. An Ultraviolet VM running in a web browser might use WebSockets. Fortunately, all of these communication mechanisms provide streams of bytes, which is a start! Unfortunately, that is where the shared layer of abstraction between most platforms ends. I have designed a great many network protocols, and I favor the humble and venerable remote procedure call (RPC) for the application layer. A tool that we have explored in past posts, Clockwork, will do all of the code generation needed for an RPC interface. It's pretty straightforward to use: you write a class with the functions you want to call, and Clockwork generates everything else, including the following:

- Server-side code to deserialize byte streams into RPC requests, execute the server-side logic, and then serialize the response

  - This includes all of the network API calls, serialization, and deserialization

- Client-side code that provides an identical API to the server-side logic, but delegates methods to the server

  - This includes all of the network API calls, serialization, and deserialization

- All of the types required to make this work

  - Including implementations of all necessary functions on the types, such as serialization and deserialization, when the compiler won't give those to you automatically
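For anyone who hasn't seen the earlier Clockwork posts, here is a hand-written Python sketch of what that generated code amounts to. It is not Clockwork's actual output or API. `Calculator`, `handle_request`, and `ClientProxy` are hypothetical names, the wire format is plain JSON, and the "network" is just a function call so the example runs in one process.

```python
import json
from typing import Callable


class Calculator:
    """Hypothetical service: the class whose methods we want to call remotely."""

    def add(self, a: int, b: int) -> int:
        return a + b


def handle_request(service: object, request: bytes) -> bytes:
    """Server side: deserialize the request, run the real method, serialize the response."""
    call = json.loads(request.decode("utf-8"))
    if call["method"].startswith("_"):
        raise ValueError("private methods are not callable remotely")
    result = getattr(service, call["method"])(*call["args"])
    return json.dumps({"result": result}).encode("utf-8")


class ClientProxy:
    """Client side: exposes the same method names, but forwards every call."""

    def __init__(self, transport: Callable[[bytes], bytes]):
        self._transport = transport  # sends request bytes, returns response bytes

    def __getattr__(self, name: str):
        def remote_call(*args):
            request = json.dumps({"method": name, "args": list(args)}).encode("utf-8")
            response = self._transport(request)
            return json.loads(response.decode("utf-8"))["result"]
        return remote_call


# Stand-in for a socket: the transport calls the server handler directly.
server = Calculator()
client = ClientProxy(lambda request: handle_request(server, request))
print(client.add(2, 3))  # 5
```

Every layer here, from method dispatch to the JSON calls, is what a code generator saves you from writing by hand for each method and in each language.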

Clockwork is pretty handy, but where it is lacking is in the serialization layer, which uses the incredibly popular and incredibly limited JSON serialization format (with support for CBOR under development). Limiting ourselves to JSON has some real drawbacks, but it's also hard to do better for a variety of historical and cultural reasons, which is what this post is about.

I have accumulated (too) many hours of experience working with serialization formats through my career. The best I have ever used is JSX, a type of XML serialization for Java. It used secret escape hatches in Java to serialize all Java objects with no modification to your Java code, and it was truly magical. My second favorite was Twisted's PB for Python, which is a very well-considered and mature serialization protocol. Its specification is not Python-specific, and I once ported it to Java. Since then, things have gone downhill in the serialization world. Everything has "standardized" on JSON, which is anything but standardized. It comes in several incompatible flavors, and serialization implementations show a distressing lack of documentation about which flavor they support. Of the currently popular serialization formats, my pick is CBOR, though it still has issues, just not as many as JSON. There are some significantly different directions you can go with serialization, such as Python's pickle or Protocol Buffers (not to be confused with Twisted's unrelated PB), and these have their own problems. Lots of problems to discuss!

Let's look at why JSON is less than optimal, and then use that as a launching point for some deeper philosophical issues. I'm talking here about classic JSON. There are multiple flavors, often undocumented, so some of what I say might not seem to apply to your flavor, which could in fact be a layer of semantic interpretation on top of classic JSON. More on that later!

JSON is just the literal notation for Javascript values. As such, it is limited to a very small set of Javascript basic types and type constructors. Javascript has traditionally had only one kind of number, a double-precision floating point number, so JSON numbers are, in practice, floating point. Similarly, there is no literal syntax for byte arrays in Javascript, so JSON lacks a built-in byte array type. The type constructors have similar arbitrary limitations. Only two are provided: lists and dictionaries. This brings us to the first problem of serialization: it is often language-specific. This is the case for Python's pickle and Go's gob, as well as Javascript's JSON. A language-specific format is fine for persisting data that will be saved and loaded by the same program, but not for communicating between programs written in different languages. Unfortunately, JSON has become established as just the way to do that, for instance between web browsers and web servers (not all of which are written in Javascript). Specifically, JSON lacks types that are basic in other languages, such as Swift's Data (a byte array), UInt32 (a 32-bit unsigned integer), Set, etc. This lack of types can take you in two different directions. One option is to use JSON types for everything and then write code in your application to translate between a JSON data structure and your local data structure. The other is to automatically map between local types and JSON types. Swift takes the latter approach with its Codable protocol. If you try to serialize a Data into JSON, you get... something. Take a guess at what it might be. I'll give you a hint: it's some kind of string. The details of the Data/JSON mapping are hidden within the implementation of the Data type. You can only uncover them through experimentation, or by reading the source code if you have it. What you have here is essentially a proprietary, undocumented serialization format on top of JSON.

So do all languages serialize byte arrays (and all other non-Javascript types) into the same JSON-based constructions? Of course not! How could they, when the implementation choices are hidden? So can we just ignore these high-level encodings and deal with things at the JSON level? Not at all! It is a leaky abstraction that is sure to cause problems. I'll spoil the surprise and save you some research: Data gets encoded as a base64 string. The deserializer needs a separate source of schema information to know that this specific string is supposed to become a Data. Without it, the naive approach is to deserialize it as a string, which is simply incorrect and can cause a variety of problems in your application. To make matters worse, base64 also comes in multiple flavors, and the output of Swift's Codable JSON encoding for Data is incompatible with some base64 decoders. Swift's JSONEncoder escapes "/" as "\/" by default. If that escape is not undone before decoding, the "\" trips up base64 decoders, since it is not a valid character in any flavor of base64. So even if you know to base64-decode this string, you may still have problems. These are all real problems I ran into while sending data structures as JSON over the network between two applications written in different languages. And these are just the gotchas I have actually encountered. The undocumented ones that haven't happened to me personally, I have no way of even knowing about!
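Here is a short Python sketch of these gotchas from the receiving side, using only the standard `json` and `base64` modules. Python stands in for "some other language" here. The `\/` input is written by hand to mimic Swift's default slash escaping rather than being produced by Swift itself. `parse_int=float` imitates a parser that, like Javascript, reads every number as a double.

```python
import base64
import binascii
import json

# 1. Numbers: a parser that treats every JSON number as a double
#    silently rounds integers above 2**53.
big = 2**53 + 1
as_double = json.loads(json.dumps(big), parse_int=float)
print(as_double == big)  # False: 9007199254740993 came back as 9007199254740992.0

# 2. Bytes: JSON has no byte-array type, so the encoder refuses them outright.
payload = bytes([0xFF, 0xFF])
try:
    json.dumps(payload)
except TypeError as err:
    print("cannot encode bytes:", err)

# 3. The usual workaround is a base64 string, but the receiver just sees a string.
text = json.dumps(base64.b64encode(payload).decode("ascii"))
print(type(json.loads(text)))  # <class 'str'>, not bytes

# 4. JSON allows "/" to be written as "\/". A JSON parser removes the escapes...
escaped = r'"\/\/8="'
print(json.loads(escaped))  # //8=
# ...but code that pulls the raw text out without JSON unescaping keeps them.
raw = escaped.strip('"')
try:
    base64.b64decode(raw, validate=True)
except binascii.Error as err:
    print("strict base64 decoder rejects it:", err)
```

Python's default, non-strict `b64decode` would instead silently discard the backslashes and succeed, which is yet another difference in behavior between base64 decoders.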

Okay, so we just need to note which JSON strings are actually encodings of Datas and decode them correctly, right? We just need to keep that schema information in the serialized data. Unfortunately, Swift's Codable JSON encoding drops the information that the original value was a Data. Since that information is not in the serialized data, the deserializer needs to know it ahead of time, from some other source. This leads us to the second problem with serialization: it often throws out type information that is critical to the correct semantic interpretation of the serialized data. Worse yet, it's not as simple as adding an annotation (via some as-yet hypothetical syntax) that the given string is actually a Data, because "Data" as the name for a byte array is specific to Swift. In Go we might want "[]byte", and in Kotlin we might want "ByteArray". How do we handle mappings between these local, language-specific types and a hypothetical multi-language standardized serialization format? Really, the only solution is to hold onto this type information during serialization and then, during deserialization, map it to an appropriate local type.
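One way to hold onto that type information is sketched below in Python. Every value that JSON cannot represent natively is wrapped in a small tagged object, and the receiver maps the tag to its own local type. The `$type`/`value` envelope and the portable names `"bytes"` and `"set"` are hypothetical conventions invented for this example, not part of any standard. A Swift receiver would map `"bytes"` to Data, a Go receiver to []byte, and so on.

```python
import base64
import json
from typing import Any

# Hypothetical portable type names: (local Python types, portable name, encoder).
ENCODERS = [
    (bytes, "bytes", lambda b: base64.b64encode(b).decode("ascii")),
    ((set, frozenset), "set", sorted),
]
# Portable name -> constructor for the local type on this side of the wire.
DECODERS = {
    "bytes": base64.b64decode,
    "set": set,
}


def to_tagged(value: Any) -> Any:
    """Replace values JSON cannot express with {"$type": name, "value": ...}."""
    for local_types, name, encode in ENCODERS:
        if isinstance(value, local_types):
            return {"$type": name, "value": encode(value)}
    if isinstance(value, list):
        return [to_tagged(v) for v in value]
    if isinstance(value, dict):
        return {k: to_tagged(v) for k, v in value.items()}
    return value


def from_tagged(obj: dict) -> Any:
    """json.loads object_hook: turn tagged objects back into local types."""
    if obj.keys() == {"$type", "value"} and obj["$type"] in DECODERS:
        return DECODERS[obj["$type"]](obj["value"])
    return obj


message = {"id": 7, "blob": b"\xff\xff", "tags": {"a", "b"}}
wire = json.dumps(to_tagged(message))
restored = json.loads(wire, object_hook=from_tagged)
print(restored == message)  # True
```

The obvious weakness is that a genuine dictionary that happens to have exactly these two keys would be misread. A convention layered on top of JSON can only go so far.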

This problem is already significant, because there are a lot of details to figure out, but it gets worse. So far we have only been talking about basic types that are likely to exist in each language. However, we also have to handle custom, user-defined types that are built with type constructors, in other words data structures such as lists, records, enumerations, etc. Supporting these is tricky for several reasons. First, the available type constructors vary between languages. Even the humble list (as in Lisp) is not uniformly supported. Dynamically typed languages often allow mixed arrays, whereas arrays in statically typed languages tend to be restricted to elements of a single type. Even more problematic: while most languages have some sort of record syntax (records are also known as product types), support for sum types is all over the place. This matters because an array of a sum type is how you would generally create a mixed array in a statically typed language. Even enumerations with associated values, the typical way to emulate sum types when they are missing from the language, are not universally supported.
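To make the sum-type point concrete, here is a Python sketch of a "mixed array" written the way a statically typed language would see it: a list of a sum type `Shape` whose variants are `Circle` and `Rect`. These are hypothetical types for illustration. The serialized form carries an explicit variant tag, because the receiving language may have no other reliable way to know which case each element is.

```python
import json
from dataclasses import asdict, dataclass
from typing import Union


@dataclass
class Circle:
    radius: float


@dataclass
class Rect:
    width: float
    height: float


# A sum type: a Shape is either a Circle or a Rect. A "mixed array" in a
# statically typed language is really a list of such a sum type.
Shape = Union[Circle, Rect]
VARIANTS = {"Circle": Circle, "Rect": Rect}


def encode_shapes(shapes: list[Shape]) -> str:
    # Record which variant each element is. Without the tag, the receiver would
    # have to guess from field names, which fails when two variants share a shape.
    return json.dumps([{"variant": type(s).__name__, "fields": asdict(s)} for s in shapes])


def decode_shapes(text: str) -> list[Shape]:
    return [VARIANTS[item["variant"]](**item["fields"]) for item in json.loads(text)]


shapes = [Circle(1.0), Rect(2.0, 3.0)]
print(decode_shapes(encode_shapes(shapes)) == shapes)  # True
```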

Approaches to serializing user-defined types are interestingly diverse. JSX serialization for Java could handle all Java objects without modification to their source code. Swift allows any of your types to implement the Codable protocol, which makes them serializable by any serializer that supports Codable (although there are few implementations to choose from). Go and Python both support arbitrary data structures in their own native serialization formats (gob and pickle, respectively), but offer only a more manual interface for JSON serialization. This gets to the next problem. The serialization implementations that can handle all types tend to rely on special magic that developers who want to implement new serialization formats cannot easily access or understand. This special magic is generally reserved for the language-specific serialization format, which is of course not easily portable to other languages. If this were not the case, we could just pick the language with the best serialization format and implement that format as a library for every other language. I have certainly tried to go this way, and I found it to be impractical. The mechanisms for getting into the internals of user-defined data structures are missing or poorly documented. As a result, writing a universal serializer requires deep expertise in every language, in addition to deep knowledge of the (often poorly documented) serialization format. This makes it quite a hard path to follow to completion.

Let's now summarize the issues:

- Language-specific serialization formats are not portable between languages

- Language-specific data structure encoding semantics implemented on top of a shared serialization format are not portable between languages

- User-defined data structures are not portable between languages

- There is a different set of base types in each language

- There is a different set of type constructors in each language

- Standard serialization formats throw away necessary type information

It seems to me that many of the issues here are related to types. We essentially have one local type on the serializer side, and we want to turn it into bytes that can then be deserialized on the other side into a potentially different type that's local to the deserializer. We also want to support user-defined data types, which are created using type constructors alongside the base types. So what we need, then, is a type system designed for serialization work. This is one of the fundamental directions that serialization protocols can take, exemplified by Protocol Buffers, Thrift, and Avro. In these protocols, users define their types in a separate type specification language. Serialization and deserialization code is then generated for each target language from those definitions. These schemes have their own issues. For one, their base types do not always correspond to the base types of each target language. Still, they resolve many of the issues with the other approaches discussed.

My proposed solution is to develop a type system specifically for serialization work. It will have type constructors to allow for user-defined types. However, it will have only a minimal set of base types. Existing schema-based serialization systems attempt to emulate the types found in the target languages, which has the issue that the types don't always match up well. By using lower-level basic types and then building them up with type constructors, my hope is to build something universal across languages. Additionally, the schema language will allow for the specification of how user-defined types map to local, language-specific types.

I've done some experimental work on this idea under the Daydream project. This is just my notebook for working out concepts and not intended to be used for anything. It's of interest here mainly for the proposed syntax for building up user-defined data structures. Here are some examples:

```
True: Singleton
False: Singleton
Boolean: Enum True False
ListNatural: List Natural
Datum: Record Length ListNatural
```

The type constructors are Singleton, Record, Enum, and List. There is one base type, Varint, which represents both integers (including big integers) and byte arrays. I hope you can already see how this differs from other schema languages, such as Protocol Buffers.
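The following small Python interpreter for the example above gives a feel for how a schema built from these constructors could drive serialization. The post does not pin down Daydream's wire format, so every detail of the encoding is an assumption made for this sketch:

- Varint is unsigned LEB128 (7 bits per byte, with the high bit as a continuation flag).
- Natural and Length, which the snippet does not define, are plain Varints.
- An Enum is the varint index of its variant, followed by that variant's encoding.
- A List is a varint length, followed by its elements.
- A Record is its fields, in order.

The class names mirror the constructors but are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Union


# Hypothetical in-memory forms of the schema's base type and type constructors.
@dataclass(frozen=True)
class Varint:
    pass


@dataclass(frozen=True)
class Singleton:
    pass


@dataclass(frozen=True)
class Enum:
    variants: tuple  # names of the schema types it can hold


@dataclass(frozen=True)
class List:
    element: str  # name of the element type


@dataclass(frozen=True)
class Record:
    fields: tuple  # names of the field types, in order


SchemaType = Union[Varint, Singleton, Enum, List, Record]

SCHEMA: dict[str, SchemaType] = {
    "Natural": Varint(),  # assumption: not defined in the snippet
    "Length": Varint(),   # assumption: not defined in the snippet
    "True": Singleton(),
    "False": Singleton(),
    "Boolean": Enum(("True", "False")),
    "ListNatural": List("Natural"),
    "Datum": Record(("Length", "ListNatural")),
}


def encode_varint(n: int) -> bytes:
    """Unsigned LEB128: 7 bits per byte, high bit set on every byte but the last."""
    if n < 0:
        raise ValueError("only non-negative integers are supported")
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def encode(type_name: str, value: Any) -> bytes:
    t = SCHEMA[type_name]
    if isinstance(t, Varint):
        return encode_varint(value)
    if isinstance(t, Singleton):
        return b""  # the type itself carries all the information
    if isinstance(t, Enum):
        variant, payload = value  # value is (variant name, variant value)
        return encode_varint(t.variants.index(variant)) + encode(variant, payload)
    if isinstance(t, List):
        return encode_varint(len(value)) + b"".join(encode(t.element, v) for v in value)
    if isinstance(t, Record):
        if len(value) != len(t.fields):
            raise ValueError(f"{type_name} expects {len(t.fields)} fields")
        return b"".join(encode(f, v) for f, v in zip(t.fields, value))
    raise TypeError(f"unknown schema type {t!r}")


print(encode("Boolean", ("False", None)).hex())  # 01
print(encode("Datum", (3, [1, 300, 2])).hex())   # 030301ac0202
```

Even in this toy version, a Boolean costs one byte, and True or False on their own cost zero bytes, because the schema decides the representation rather than a fixed set of built-in types.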

When you compile this schema, you get a lot of interesting stuff back. First, the compiler will determine an efficient representation for your schema. Since everything is built up from the schema, there is no fixed representation for common types like strings, floats, bools, and int32s. The serialization format depends entirely on your schema. The schema compiler takes as additional input a mapping of which user-defined data structures map to already defined local data structures in your code (on both the serializer and deserializer side). For mapped data structures, serialization and deserialization code will be generated, whereas for unmapped data structures the code for implementing the data structure itself will also be generated. What you end up with is what we wanted to get to originally: data structures that can be sent between programming languages as byte streams. These are just plain data structures in each language. All data structures are supported, including user-defined data structures. The goal is to be a universal serialization format, as well as a universal format for specifying data structures in a cross-language way.
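The mapping step could look roughly like the following Python sketch, where the schema compiler's job is reduced to picking a local class for each record type. If the mapping names one of the application's classes, that class is reused; otherwise a plain data class is generated. The `Point` and `Datum` records, their field names, `MyPoint`, and `LOCAL_MAPPING` are all hypothetical. A real compiler would emit source code and codecs rather than build classes at runtime with `dataclasses.make_dataclass`.

```python
from dataclasses import dataclass, fields, make_dataclass

# Hypothetical schema records: type name -> ordered field names.
RECORDS = {
    "Point": ("x", "y"),
    "Datum": ("length", "naturals"),
}


# An existing local type that the application already has.
@dataclass
class MyPoint:
    x: int
    y: int


# Hypothetical user-supplied mapping: schema type name -> existing local class.
LOCAL_MAPPING = {"Point": MyPoint}


def resolve_local_types(records: dict, mapping: dict) -> dict:
    """Reuse mapped classes; generate a data class for every unmapped record."""
    result = {}
    for name, field_names in records.items():
        if name in mapping:
            cls = mapping[name]
            # Sanity check that the existing class matches the schema's fields.
            if tuple(f.name for f in fields(cls)) != field_names:
                raise TypeError(f"{cls.__name__} does not match schema type {name}")
            result[name] = cls
        else:
            result[name] = make_dataclass(name, field_names)
    return result


resolved = resolve_local_types(RECORDS, LOCAL_MAPPING)
print(resolved["Point"] is MyPoint)       # True: the existing class is reused
print(resolved["Datum"](3, [1, 300, 2]))  # Datum(length=3, naturals=[1, 300, 2])
```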

To be fair, there are some downsides to this approach as well. Universality is in conflict with specialization. While Daydream will generate data structures for you, it cannot be as expressive as the local programming language. However, I think an important thing to remember is that you don't need to use it for all of your data structures, only the ones that you want to send over the wire. I think that there is a natural logical separation between data structures meant for communication and those meant for holding internal state. In some sense this means you still need an ORM-like layer in your application to translate between communication and internal state. However, I feel like the affordances here are nicer than writing custom logic to move between state and, say, nested JSON dictionaries.

This post was somewhat of a diversion from describing our language for writing audio effects, but it sets up my ideas for how to create a syntax for writing user-defined data structures, so in my next post I'll get into that in more detail.